Adapting the HoloLens OS designs to VR headsets for Windows Mixed Reality presented new challenges. I worked with the design team to port our HoloLens OS to a new engine for the RS4 update, and designed a templating system that allowed us to scale assets across both platforms.

In addition to designing and integrating our controller teleportation UX, I created the real-time assets for our motion controllers.

The motion controller assets I created are widely distributed. They exist in the MR shell as well as our cross-platform toolkits and tutorials.

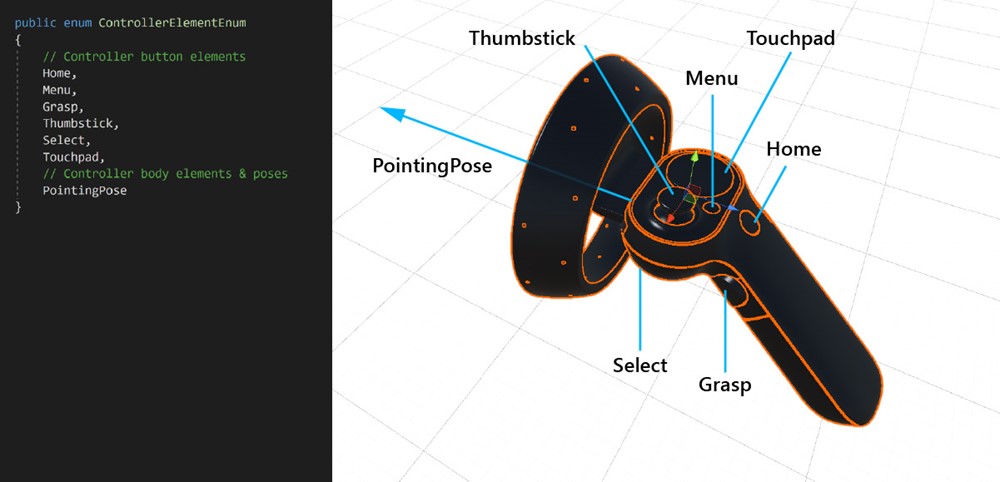

The asset's hierarchy also carries the blueprint for its behavior. I partnered with engineers to design a framework for controller animation and attach points, managed by device drivers at the API level.

Built into the asset are transforms that describe button/joystick/touchpad articulation, label/UI attach points, and even the correct pointing pose relative to the model, guaranteeing that it is always rendered in an accurate position.
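As a rough illustration of how articulation transforms like these can be driven, here is a minimal Python sketch. It assumes each movable part stores an unpressed and a pressed offset and blends between them by the input value. The names `ArticulatedPart` and `articulate`, and the offsets used, are hypothetical and are not the shipped asset's schema.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class ArticulatedPart:
    """A movable controller part with two authored extremes (hypothetical layout)."""
    name: str
    unpressed: Vec3  # local offset when the input value is 0.0
    pressed: Vec3    # local offset when the input value is 1.0


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linearly interpolate between two offsets, component by component."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def articulate(part: ArticulatedPart, value: float) -> Vec3:
    """Return the part's local offset for an input value, clamped to [0, 1]."""
    t = min(1.0, max(0.0, value))
    return lerp(part.unpressed, part.pressed, t)


# Assumed example: a trigger that travels 1 cm along -Z when fully pressed.
trigger = ArticulatedPart("trigger", (0.0, 0.0, 0.0), (0.0, 0.0, -0.01))
half_pressed = articulate(trigger, 0.5)
```

Keeping both extremes in the asset means the renderer only needs the raw input value, so the same logic can animate any controller model that follows the convention.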

Because VR is not bound by the limits of the real world, the device is rendered with a bit of magic: the top ring is not attached to the body, and it animates to accentuate the user’s teleport and dash inputs.

The virtual controller also glows in alignment with the teleport arc visuals, indicating whether or not the teleportation target is valid.

This video also demonstrates a fun experiment: to explore adding more human presence to MR, I articulated a pair of hands using the direct inputs from the motion controller drivers.

I also worked on vision concepts and demos that explored the future of Mixed Reality and HoloLens. I designed the virtual assistant character seen in this vision video for a real, functioning demo created for executive and board review at Microsoft.

Powered by Microsoft Cognitive Services, this bot could respond to speech input with its own speech and emotional states.

Working with concept artist Jarod Erwin, I designed the character and created its animations for talking, listening, and emotional states. It’s able to locomote in the scene and look around at different demo participants.

I created the shader for its bubble, which reinforces its emotional states through color and tangible appearance. When the bot is sad, the bubble is watery; when it’s angry, the bubble hardens into a vibrating shell.